Are ChatGPT & AI Threats in Your Data Security Training?

Data Security takes on a whole new meaning in the age of Artificial Intelligence. While the tools themselves may be game-changers regarding workload, they don’t come without a price for security.

ChatGPT usage is on the rise, and it’s easy to see why. An article that might take a company 10 hours to research, write, edit, and optimize for SEO can be drafted within minutes. Even if the draft still needs editing and fact-checking, it’s a tremendous time saver. And that’s just for writing. These systems are also used for coding, art, design, and more.

The risk lies in the extensive data sets behind these tools, which can contain a lot of confidential and sensitive information. Companies using tools like ChatGPT must create processes and procedures around them. Where are employees logging in? What are they putting into their prompts? It’s one thing to ask ChatGPT to write an article based on already public information, but when you provide it with corporate IP, you open up a data security problem. The issue is that most people don’t yet understand how far and wide that information can travel or what it can be used for. And the potential problems don’t stop there.
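One way to put a process around prompts is to scrub obviously sensitive values before any text leaves the company. The sketch below is only an illustration under stated assumptions: the function name `redact_prompt` and the two patterns (email addresses and US Social Security numbers) are hypothetical, and a real policy would define its own list and would likely need more than pattern matching to catch corporate IP.

```python
import re

# Hypothetical patterns for illustration only; a real policy would
# maintain its own, much longer list of what counts as sensitive.
REDACTION_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact_prompt(prompt: str) -> str:
    """Replace each match of a known sensitive pattern with a labeled placeholder."""
    for label, pattern in REDACTION_PATTERNS.items():
        prompt = pattern.sub(f"[{label} REDACTED]", prompt)
    return prompt


if __name__ == "__main__":
    draft = "Summarize the complaint from jane.doe@example.com, SSN 123-45-6789."
    # The redacted text, not the original draft, is what would be sent onward.
    print(redact_prompt(draft))
```

A filter like this catches only well-structured identifiers. It does not recognize trade secrets, source code, or contract language, so it complements employee training rather than replacing it.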

Consider a learning tool that, if misused by bad actors, could create a massive false narrative around politics, national security, and more. Whoever feeds it information or misinformation is teaching it. Will people continue to use their own discernment and fact-check what this tool tells them?

Let’s dig into a few more issues.

- Because the tool learns how real people interact, it can be used to write phishing messages that even the most diligent employees may fall for. These communications will seem more human-like, making them harder to recognize. Companies must start making their employees aware now.

- ChatGPT could be used to write malicious code itself. While safeguards are supposed to prevent this, the fact that people are already experimenting with it should tell us it’s only a matter of time.

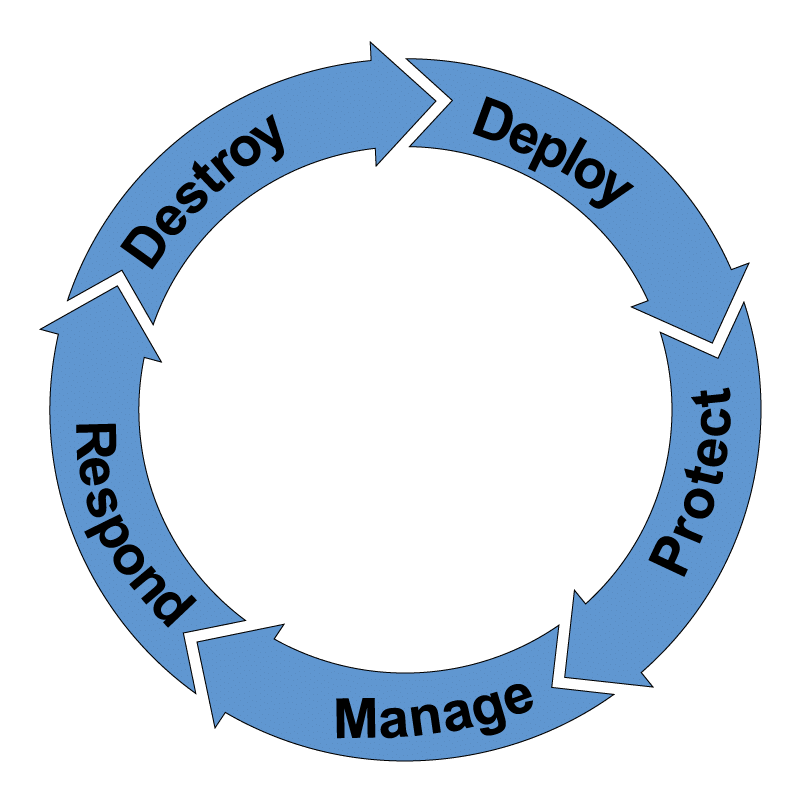

While many businesses celebrate the rise of Artificial Intelligence and its promise of getting the job done faster and more accurately, we must recognize the security and ethical issues arising from this rapidly advancing technology. If your data security training and policies don’t yet address at least the concerns above, start including them today.

Reclamere can help. Get in touch for a deep-dive discussion into staying ahead of the curve with AI and data security. Additionally, you can hear more from our CEO, Angie Singer Keating, as she highlights a few AI data security challenges that were presented at RSA Conference 2023 in a recent LinkedIn Live.